# Source: https://huggingface.co/spaces/akhaliq/Detectron2

import os

os.system('pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu102/torch1.9/index.html')

import gradio as gr

# check pytorch installation:

import torch, torchvision

print(torch.__version__, torch.cuda.is_available())

assert torch.__version__.startswith("1.9") # please manually install torch 1.9 if Colab changes its default version
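
# A softer alternative to the assert above, sketched under assumptions:
# `torch_version_matches` is a hypothetical helper (not part of torch or
# detectron2). It compares only the leading dotted components of a version
# string, so build suffixes such as "+cu102" are ignored and "1.90" is not
# mistaken for "1.9" the way a plain startswith("1.9") check would be.
def torch_version_matches(version, required="1.9"):
    # Drop any local build suffix, e.g. "1.9.0+cu102" -> "1.9.0".
    base = version.split("+", 1)[0]
    # Compare only as many dotted components as `required` specifies.
    want = required.split(".")
    return base.split(".")[:len(want)] == want

# Possible usage: warn instead of aborting when the installed version differs.
# if not torch_version_matches(torch.__version__):
#     print("Warning: the detectron2 wheel above was built for torch 1.9")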

# Some basic setup:

# Setup detectron2 logger

import detectron2

from detectron2.utils.logger import setup_logger

setup_logger()

# import some common libraries

import numpy as np

import cv2

# import some common detectron2 utilities

from detectron2 import model_zoo

from detectron2.engine import DefaultPredictor

from detectron2.config import get_cfg

from detectron2.utils.visualizer import Visualizer

from detectron2.data import MetadataCatalog, DatasetCatalog

from PIL import Image

cfg = get_cfg()

cfg.MODEL.DEVICE = 'cpu'

# add project-specific config (e.g., TensorMask) here if you're not running a model in detectron2's core library

cfg.merge_from_file(model_zoo.get_config_file("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml"))

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5 # set threshold for this model
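
# What the score threshold above means, as a rough sketch: at test time,
# detections whose confidence does not exceed the threshold are dropped.
# `filter_by_score` is a hypothetical helper for illustration only; detectron2
# applies the threshold internally and this is not its implementation.
def filter_by_score(detections, threshold=0.5):
    # `detections` is assumed to be a list of (label, score) pairs.
    return [(label, score) for label, score in detections if score > threshold]

# Possible usage: filter_by_score([("cat", 0.9), ("dog", 0.3)]) keeps only the
# ("cat", 0.9) pair at the default threshold of 0.5.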

# Find a model from detectron2's model zoo. You can use the https://dl.fbaipublicfiles... url as well

cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url("COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")

predictor = DefaultPredictor(cfg)

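def inference(img):
    # Gradio passes a temporary file object (type="file"); read it as a BGR array,
    # which is the input format DefaultPredictor expects by default.
    im = cv2.imread(img.name)
    outputs = predictor(im)
    # Visualizer expects RGB, so reverse the channel order of the BGR image.
    v = Visualizer(im[:, :, ::-1], MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2)
    out = v.draw_instance_predictions(outputs["instances"].to("cpu"))
    return Image.fromarray(np.uint8(out.get_image())).convert('RGB')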

title = "Detectron 2"

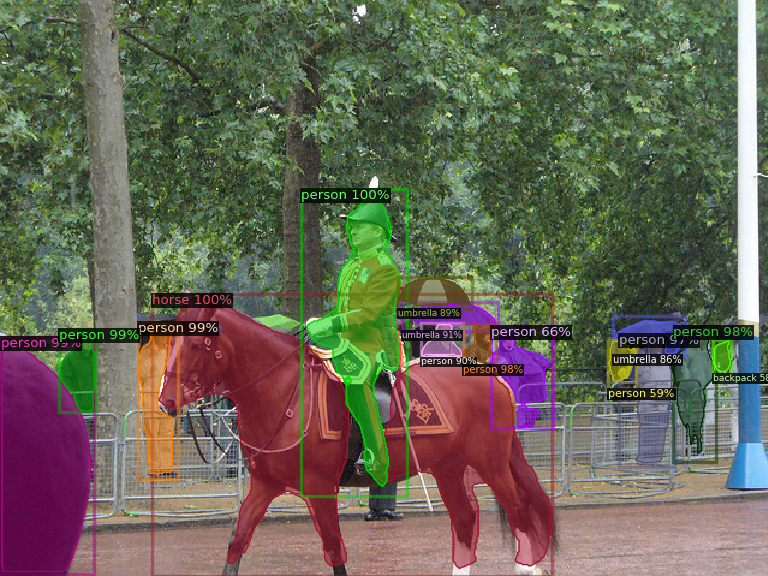

description = "Gradio demo for Detectron 2: A PyTorch-based modular object detection library. To use it, simply upload your image, or click one of the examples to load them. Read more at the links below."

article = "<p style='text-align: center'><a href='https://ai.facebook.com/blog/-detectron2-a-pytorch-based-modular-object-detection-library-/' target='_blank'>Detectron2: A PyTorch-based modular object detection library</a> | <a href='https://github.com/facebookresearch/detectron2' target='_blank'>Github Repo</a></p>"

examples = [['example.png']]

gr.Interface(
    inference,
    inputs=gr.inputs.Image(type="file"),
    outputs=gr.outputs.Image(type="pil"),
    enable_queue=True,
    title=title,
    description=description,
    article=article,
    examples=examples,
).launch()