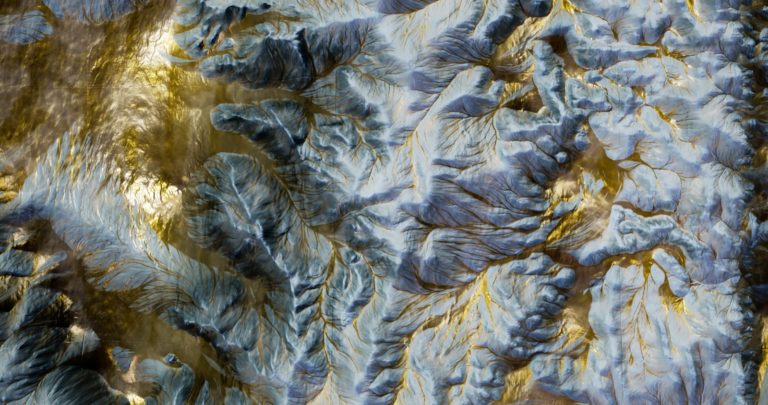

Created by Franz Rosati and conceived as an audiovisual performance, a series of digital artworks and a site-specific installation, ‘Latentscape’ depicts the exploration of virtual landscapes and territories, accompanied by music generated with machine learning tools trained on traditional, folk and pop music without temporal or cultural limits.

The work explores boundless territories, where streams of pixels take the form of cracks, erosions and mountains, and where colours and textures stand for the memories those territories express. All the landscape elevation maps are generated by different machine learning processes (generative adversarial networks, or GANs), trained on datasets of publicly available satellite imagery that are mixed and weighted with datasets of other types to mislead the training phase and lead to unexpected results.

While the concepts of map and border appear to be a mere theoretical and functional superstructure, the landscapes and territories, with their nuances, inlets, breaks, colours and topological diversity, together with sounds and music echoing ancient timbral landmarks, depict human data meeting at border points, marking the places where thoughts, memories and experiences meet and fuse.

Franz Rosati

StyleGAN and SGAN are the two GANs trained on satellite imagery (as described in the accompanying PDF); they were then used to generate detail textures and heightmaps, respectively. The dataset is not random: it is mainly made of heightmaps taken from critical border zones such as Gaza, Armenia, Nepal, Somalia and Mexico. The material produced by the GANs, approximately 90 GB of 2K and 4K images (the team tried 8K, but it was simply too much), is then normalized and processed to fit Unreal Engine's landscape specifications. Unreal Engine 4.26 is the main platform, with custom landscape materials and specific parameters that adjust and transform each landscape: additional maps generated from the original heightmap enrich the terrain with colour and features, exploring different aesthetics.
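The normalization step is not detailed further here (QuadSpinner Gaea is credited in the software list for heightmap adaptation). As a minimal Python sketch, assuming a square float heightmap already loaded as nested lists, the code below rescales the values to the full 16-bit range, resamples them to one of the square resolutions recommended in the UE4 Landscape Technical Guide, and packs them as little-endian 16-bit samples, the layout Unreal accepts as a .r16/.raw heightmap. The function names are hypothetical, and the size table is an assumption to check against the documentation for the engine version in use.

```python
import array
import sys
from typing import List, Tuple

# Square landscape resolutions recommended for UE4 landscapes
# (assumption: verify against the docs for your engine version).
RECOMMENDED_SIZES = (127, 253, 505, 1009, 2017, 4033, 8129)


def pick_target_size(side: int) -> int:
    """Largest recommended size not exceeding `side` (smallest one if none fits)."""
    fitting = [s for s in RECOMMENDED_SIZES if s <= side]
    return fitting[-1] if fitting else RECOMMENDED_SIZES[0]


def normalize_to_uint16(heights: List[List[float]]) -> List[List[int]]:
    """Linearly rescale a grid of float heights to the full 0..65535 range."""
    lo = min(min(row) for row in heights)
    hi = max(max(row) for row in heights)
    span = (hi - lo) or 1.0  # a flat map would otherwise divide by zero
    return [[round((h - lo) / span * 65535) for h in row] for row in heights]


def resample_nearest(grid: List[List[int]], size: int) -> List[List[int]]:
    """Nearest-neighbour resample of a square grid to size x size, keeping corners."""
    src = len(grid)
    scale = (src - 1) / (size - 1) if size > 1 else 0.0
    idx = [round(i * scale) for i in range(size)]
    return [[grid[r][c] for c in idx] for r in idx]


def to_r16_bytes(grid: List[List[int]]) -> bytes:
    """Pack samples row by row as 16-bit little-endian unsigned integers."""
    samples = array.array("H", (v for row in grid for v in row))
    if sys.byteorder == "big":
        samples.byteswap()  # force little-endian on big-endian hosts
    return samples.tobytes()


def prepare_heightmap(heights: List[List[float]]) -> Tuple[int, bytes]:
    """Normalize, resize and pack a square heightmap for a landscape import."""
    if any(len(row) != len(heights) for row in heights):
        raise ValueError("expected a square heightmap")
    size = pick_target_size(len(heights))
    grid = resample_nearest(normalize_to_uint16(heights), size)
    return size, to_r16_bytes(grid)


if __name__ == "__main__":
    # Synthetic 300 x 300 diagonal ramp standing in for a GAN output
    ramp = [[(r + c) / 598 for c in range(300)] for r in range(300)]
    size, data = prepare_heightmap(ramp)
    print(size, len(data))  # 253 128018
```

Nearest-neighbour resampling keeps the sketch short; a production pipeline would more likely use bilinear or bicubic filtering to avoid visible terracing, and would read and write image files rather than nested lists.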

All the sounds in Latentscape are made with SampleRNN, a machine learning model, trained on custom datasets built from baroque and renaissance music mixed with field recordings and with popular and traditional music from all over the world. The mix centres mainly on string instruments, such as the Persian santoor, theorbo, harpsichord and guzheng, and on voices from both the Middle Eastern and the Western repertoire. Max4Live was then used to organise the sounds through a custom multi-sampling random player that generates dense masses of sound from the SampleRNN output. Outputs from different training epochs serve different purposes: early epochs, for example, are close to noise and can be very interesting as impacts, room tones or background noise. The main timbral gradients used are strings, plucked instruments and choirs.
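The Max4Live device itself is not published here. As a rough Python sketch of the same idea, assuming the SampleRNN output has already been decoded into mono float clips and grouped by training epoch in memory, the code below draws clips at random from a chosen epoch range and layers them at random offsets and gains into one dense buffer. `pick_clips`, `render_mass` and the epoch-keyed pool are hypothetical names, not part of the original toolchain.

```python
import random
from typing import Dict, List, Sequence, Tuple

Clip = List[float]  # mono audio samples, nominally in [-1.0, 1.0]


def pick_clips(pool: Dict[int, List[Clip]], epochs: range, count: int,
               rng: random.Random) -> List[Clip]:
    """Randomly draw `count` clips (with replacement) from the given epochs."""
    candidates = [clip for ep in epochs for clip in pool.get(ep, [])]
    if not candidates:
        raise ValueError("no clips available for the requested epochs")
    return [rng.choice(candidates) for _ in range(count)]


def render_mass(clips: Sequence[Clip], length: int, rng: random.Random,
                gain_range: Tuple[float, float] = (0.3, 1.0)) -> List[float]:
    """Overlay clips at random offsets and gains, then peak-normalize if clipping."""
    out = [0.0] * length
    for clip in clips:
        offset = rng.randrange(length)       # random start point in the buffer
        gain = rng.uniform(*gain_range)      # random level per layer
        end = min(length, offset + len(clip))
        for i in range(offset, end):         # clips running past the end are truncated
            out[i] += gain * clip[i - offset]
    peak = max((abs(x) for x in out), default=0.0)
    if peak > 1.0:
        out = [x / peak for x in out]        # rescale only when the sum exceeds full scale
    return out


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so a given "mass" can be reproduced
    # Hypothetical pool: epoch number -> short synthetic clips
    pool = {
        1: [[rng.uniform(-1.0, 1.0) for _ in range(200)] for _ in range(3)],  # noisy early epoch
        40: [[0.5 * ((i % 20) / 10 - 1) for i in range(400)] for _ in range(3)],
    }
    mass = render_mass(pick_clips(pool, range(1, 2), 8, rng), 2000, rng)
    print(len(mass))  # 2000
```

Restricting `epochs` to early checkpoints would select the noise-like material described above for impacts and room tones; seeding the random generator makes a particular layering reproducible.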

Finally, the live version of Latentscape, used for performances and installations, runs on a TouchDesigner patch that acts as a media server, managing roughly 1 TB of HAP videos on a GTX 1060 and adding several real-time procedural processes on heightmaps as interludes between scenes. All sounds are loaded into an Elektron Octatrack and an ER-301 Sound Computer and arranged there. The main difference between the screen-based output and the live set is that the former is contemplative, while the latter is dynamic and narrative/cinematic.

Watch the live performance at aimusicfestival by Sonar, held at CCCB on October 28th, 2021.

https://www.franzrosati.com/lts-av-live-set/

Software

Visuals

- Unreal Engine [Scene building, Surfacing, Sequence and Scenario rendering]

- QuadSpinner Gaea [heightmap adaptation and terrain mask extraction]

- TouchDesigner [Live visuals, Dataviz layout production]

- FFmpeg [trimming, editing, final encoding]

- Adobe Premiere [final edit, color correction for the short film]

Audio Production

- SampleRNN [sound generation]

- Ableton Live 10 / Max 4 Live [sound organization]

- Reaper [Ambisonic Spatialization]

- Cubase 10.5 [mix and editing]

AI/ML [TensorFlow, PyTorch]

Hardware

Studio Setup

- PC workstation: Intel Core i9-9900K / NVIDIA RTX 2080 / 64 GB RAM

Concert Setup

- Elektron Octatrack [sound management, preset manager for audio/visuals]

- ER-301 Sound Computer [sound management, live granular processing]

- TouchDesigner [real-time video asset management]

Installation/Film Setup

- Screens + BrightSign media players