For the year 2022, it’s remarkable how much visual generation is still based on decades-old algorithms. But here’s one to watch – voraciously experimental media artist Robert Henke is into noise as his next jam. That makes it a perfect time to revisit some of the ubiquitous stuff that came before.

One thing to admire about Robert as an artist is his dedication to what scientists, at least, call “basic research.” It’s a basic curiosity about the world around us. That ability to go back to the drawing board and explore something without a particular sense of what “art” it might make can lead down new creative paths. In music you’ll hear “woodshedding” as going off to focus on chops. In electronic music, media art, and electronic visuals, it’s often useful to give yourself some space to focus on those chops for your brain, even if the process is messy or time-consuming.

Robert has made this public, though I know that it isn’t really ready for primetime. But I think that’s the reason to have a look now – while it’s in its most germinal state.

So apparently this all began on a laser project, but as Robert wrote on his Facebook page, there’s “… that moment when you spent 8 hours trying to solve a seemingly simple problem and no amount of thinking and no web research brought any real success and then you begin to realize that the problem simply has not mathematical solution for your given parameter space. . .. .”

That has led him to write some custom algorithms for two-dimensional noise. He’s using gen~, the audio object for the Gen language in Cycling ’74’s Max. It’s a patcher environment that lets you work in low-level compiled code. Gen is itself worth a woodshed side trip:

gen~ for Beginners, Part 1: A Place to Start

https://docs.cycling74.com/max8/vignettes/gen_topic

Wait, why audio? Well, it’s basically just there to do some low-level math efficiently; Jitter is used to visualize the results. Robert is quick to mention that he likes working in Max for its comfort level – in other words, you do tend to be more productive when you work inside an environment you know.

It’s been fun watching this evolve over the last couple of weeks:

And there’s already a nascent page up on that nerdy oasis that is the Robert Henke / Monolake personal website. There, he’s got a clearer description:

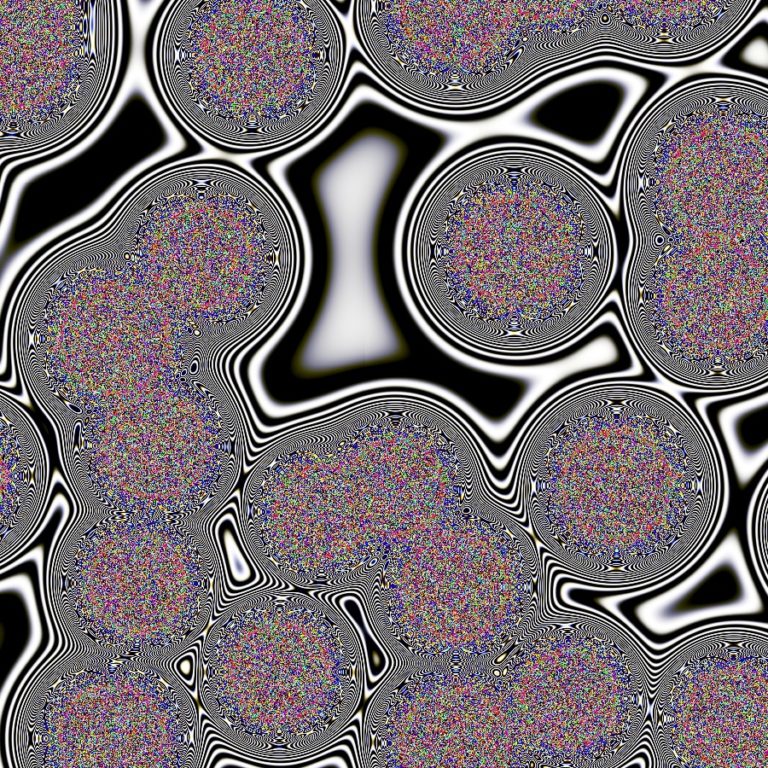

For a laser graphics project several methods of random noise distributions in two dimensional spaces have been explored. Instead of using standard libraries providing the known Voronoi, Perlin etc. noise algorithms, I started to write own variants.

The images on this page are a byproduct of the development. I needed a pixel based visualisation to verify that the code works as intended. The final code will be a ‘black box’ accepting X and Y positions as input and a parameter set defining the topology and it will return a float value that can be used to control various aspects of laser drawings as a function of momentary beam position. The colorisation in the rendered images here is obtained by slight parameter variations for the R,G,B channels and is there for pure aesthetic reasons.

Check it out:

Exploring 2D noise patterns

And since that’s just a teaser, I sure bookmarked it to watch for more.

Frankly, it’s about time we got some back-to-basics experimentation with noise – especially since algorithms like Perlin noise are themselves the result of exactly this sort of experimental side trip. At the same time, for all the cool capabilities of new graphics chipsets and whatnot – as you know I’m glad to celebrate on this here website – a lot of the lack of aesthetic progress is down to a whole lot of old-school algorithm use.

In other words, you know how acid techno now sounds a lot like the original acid techno? Welp, there you are – just substitute “procedural landscape generation.”

That being said, if this sort of visual inspires you, your best bet – ironically – is probably to do exactly what Robert was trying to get away from. (He does say “variants” – and note, too, that the other thing he did was to get away from standard libraries.) But yeah, understanding something like Perlin Noise and how it evolved is not a bad foundation in how to forge your own direction in this sort of math-made eye candy.

Daniel Shiffman, who comes from a math background rather than art, is uniquely talented at making math accessible to artists. I got a whole lot from following Dan’s work on creative coding environment Processing in its Java iteration, but it’s really nice now to be able to use it via JavaScript.

And here’s a decent look at Perlin noise from the perspective of procedural landscape generation.

Also worth reading, here is a brilliantly easy-to-follow discussion on noise, with some nice reflections on nature:

https://thebookofshaders.com/11/

Perlin Noise comes from Ken Perlin, and as it happens his motivation was a whole lot like Robert’s evidently was – he was dissatisfied with the look of the algorithms already used at the time. It started with his work at MAGI, aka Mathematical Applications Group – a New York-based firm that went from evaluating nuclear radiation exposure to, you know, Disney animation. Here’s how it happened, according to an early 2000s presentation:

I first started to think seriously about procedural textures when I was working on TRON at MAGI in Elmsford, NY, in 1981. TRON was the first movie with a large amount of solid shaded computer graphics. This made it revolutionary. On the other hand, the look designed for it by its creator Steven Lisberger was based around the known limitations of the technology.

…

Working on TRON was a blast, but on some level I was frustrated by the fact that everything looked machine-like. In fact, that became the “look” associated with MAGI in the wake of TRON. So I got to work trying to help us break out of the “machine-look ghetto.”

One of the factors that influenced me was the fact that MAGI SynthaVision did not use polygons. Rather, everything was built from boolean combinations of mathematical primitives, such as ellipsoids, cylinders, truncated cones. As you can see in the illustration on the left, the lightcycles are created by adding and subtracting simple solid mathematical shapes.

This encouraged me to think of texturing in terms not of surfaces, but of volumes. First I developed what are now called “projection textures,” which were also independently developed by quite a few folks. Unfortunately (or fortunately, depending on how you look at it) our Perkin-Elmer and Gould SEL computers, while extremely fast for the time, had very little RAM, so we couldn’t fit detailed texture images into memory. I started to look for other approaches.

The first thing I did in 1983 was to create a primitive space-filling signal that would give an impression of randomness. It needed to have variation that looked random, and yet it needed to be controllable, so it could be used to design various looks. I set about designing a primitive that would be “random” but with all its visual features roughly the same size (no high or low spatial frequencies).

I ended up developing a simple pseudo-random “noise” function that fills all of three-dimensional space. A slice out of this stuff is pictured to the left. In order to make it controllable, the important thing is that all the apparently random variations be the same size and roughly isotropic. Ideally, you want to be able to do arbitrary translations and rotations without changing its appearance too much. You can find my original 1983 code for the first version here.

My goal was to be able to use this function in functional expressions to make natural-looking textures. I gave it a range of -1 to +1 (like sine and cosine) so that it would have a dc component of zero. This would make it easy to use noise to perturb things, and just “fuzz out” to zero when scaled to be small.
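The properties in that passage (a signed range centered on zero, features of roughly one size) show up even in a tiny gradient-noise sketch. This is not Perlin’s 1983 code, and it is 2D rather than 3D to keep it short. The hash, the function names, and the `seed` parameter are assumptions for illustration only.

```python
import math


def _gradient(ix: int, iy: int, seed: int = 0) -> tuple:
    """Repeatable pseudo-random unit vector attached to a grid point."""
    h = (ix * 374761393 + iy * 668265263 + seed * 1442695041) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    h ^= h >> 16
    angle = 2.0 * math.pi * h / 4294967296.0
    return math.cos(angle), math.sin(angle)


def _fade(t: float) -> float:
    """Cubic ease curve used to blend between grid corners."""
    return t * t * (3.0 - 2.0 * t)


def gradient_noise(x: float, y: float, seed: int = 0) -> float:
    """Signed 2D gradient noise: zero at grid points, within -1..+1 elsewhere."""
    ix, iy = math.floor(x), math.floor(y)
    fx, fy = x - ix, y - iy

    def corner(dx: int, dy: int) -> float:
        # Dot product of the corner's gradient with the offset from that corner.
        gx, gy = _gradient(ix + dx, iy + dy, seed)
        return gx * (fx - dx) + gy * (fy - dy)

    u, v = _fade(fx), _fade(fy)
    top = corner(0, 0) + (corner(1, 0) - corner(0, 0)) * u
    bottom = corner(0, 1) + (corner(1, 1) - corner(0, 1)) * u
    return top + (bottom - top) * v
```

Because the output swings both ways around zero, something like `height + 0.2 * gradient_noise(x, y)` perturbs a value without shifting its average much, which is the “seasoning” use.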

He has a beautiful way of thinking about it, too – that noise is a “seasoning,” boring on its own, like salt. (Then again, Robert’s approach here might contradict that.) Perlin also explains that “The fact that noise doesn’t repeat makes it useful the way a paint brush is useful when painting.”

The interesting twist, which I think doesn’t get repeated as much in the Tron CGI lore, is that the greatest breakthrough was probably not Perlin noise and texturing themselves, but the code used to accomplish them: this seems to be the moment the shader was born. (If Ken is wrong here, CDM would presumably be the place to find out that there’s an earlier example.)

In late 1983 I wrote a language to allow me to execute arbitrary shading and texturing programs. For each pixel of an image, the language took in surface position and normal as well as material id, ran a shading, lighting and texturing program, and output color. As far as I’ve been able to determine, this was the first shader language in existence (as my grandmother would have said, who knew?).

I treated normal perturbation, local variations in specularity, nonisotropic reflection models, shading, lighting, etc. as just different forms of procedural texture – I didn’t see any reason to make a big distinction between them. I presented this work first at a course in SIGGRAPH 84, and then as a paper in SIGGRAPH 85. Because the techniques were so simple, they quickly got adopted throughout the industry. By around 1988 noise-based shaders were de rigeur in commercial software.

To me, it’s Perlin’s later work on “Hypertexture” that’s really interesting – the 1989 project with student Eric Hoffert, which used the same procedural texture techniques to create entire 3D landscapes. Hypertextures are Perlin’s Bitches Brew to the Kind of Blue that is Perlin Noise.

Go ahead, read that paper, too. Jeez, 1989 journal articles look kind of like they were published in 1889 now.

https://ohiostate.pressbooks.pub/app/uploads/sites/45/2017/09/perlin-hypertexture.pdf

As for Perlin Noise, it lives on. Prof. Shiffman and Prof. Perlin have been colleagues at New York University. And for his part, Ken Perlin has kept improving his algorithm. Here’s a 2002 paper on making it better. (Subtly embedded in the paper title is the answer to “what does Ken Perlin call Perlin Noise?”)

Improving Noise
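For a taste of what “better” means there: one of the changes in that paper is to swap the cubic blend curve 3t² − 2t³ for the quintic 6t⁵ − 15t⁴ + 10t³, whose second derivative is zero at both ends, so neighboring cells join without the faint creases the cubic can leave in shaded surfaces. (The paper also replaces the random gradient table with a small fixed set of directions, which isn’t shown here.) The short Python sketch below only compares the two curves; the function names are my own.

```python
def fade_cubic(t: float) -> float:
    """Older blend curve: 3t^2 - 2t^3."""
    return t * t * (3.0 - 2.0 * t)


def fade_quintic(t: float) -> float:
    """Blend curve from the 2002 paper: 6t^5 - 15t^4 + 10t^3."""
    return t * t * t * (t * (t * 6.0 - 15.0) + 10.0)


def second_derivative_cubic(t: float) -> float:
    """d2/dt2 of the cubic: 6 - 12t, which is nonzero at t = 0 and t = 1."""
    return 6.0 - 12.0 * t


def second_derivative_quintic(t: float) -> float:
    """d2/dt2 of the quintic: 120t^3 - 180t^2 + 60t, zero at t = 0 and t = 1."""
    return t * (t * (120.0 * t - 180.0) + 60.0)
```

Both curves run from 0 to 1 and cross 0.5 at the midpoint. The difference is only in how flat they are where one grid cell hands off to the next.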

There’s Java code, which my browser was afraid of for security reasons but I promise you isn’t malware:

https://mrl.cs.nyu.edu/~perlin/noise/

Ken Perlin is teaching now at NYU – but if you can’t afford an NYU degree, you can also just go down a massive linkhole on their website and take the time you save to mess around with hypertextures and noise:

https://mrl.cs.nyu.edu/~perlin/

There are plenty more “toys” than those two, but here you go:

https://mrl.cs.nyu.edu/~perlin/experiments/hypertexture/

Oooh, hypertextures:

Cloth, by Ken.

Now I’ll be interested to see what Dan and Ken think of Robert’s creation – go!

What important moments in noise and procedural graphics history did I miss? (I’m sure I missed some.) Let us know.

Bonus round – John Madden just died and we still have pandemic lockdowns coming, so now is the ideal time for open-source chalk drawings from Ken Perlin, too!

Chalktalk is now Open Source!